A financial services company hosts its data warehouse on a Hadoop cluster located in an on-premises data center. The data is 300 TB in size and grows by 1 TB each day. The data is generated in real time from the company's trading system. The raw data is transformed at the end of the trading day using a custom tool running on the Hadoop cluster.

The company is migrating its data warehouse to AWS using a managed data warehouse product provided by a third party that can ingest data from Amazon S3. The company has already established a 10 Gbps connection with an AWS Region using AWS Direct Connect. The company is required by its security and regulatory compliance policies to not transfer data over the public internet. The company wants to minimize changes to its custom tool for data transformation. The company also plans to eliminate the on-premises Hadoop cluster after the migration.

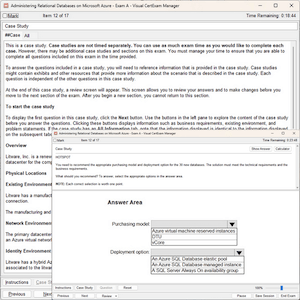

Which solution MOST cost-effectively meets these requirements?

A. Create a VPC endpoint for Amazon S3. Run a one-time copy job using the DistCp tool to copy existing files from Hadoop to a target S3 bucket over the VPC endpoint. Schedule a nightly DistCp job on the Hadoop cluster to copy the incremental files produced by the custom tool to the target S3 bucket.

B. Create a VPC endpoint for Amazon S3. Run a one-time copy job using the DistCp tool to copy existing files from Hadoop to a target S3 bucket over the VPC endpoint. Schedule a nightly job on the trading system servers that produces raw data to copy the incremental raw files to the target S3 bucket. Run the data transformation tool on a transient Amazon EMR cluster to output files to Amazon S3.

C. Create a VPC endpoint for Amazon S3. Run a one-time copy job using the DistCp tool to copy existing files from Hadoop to a target S3 bucket over the VPC endpoint. Set up an Amazon Kinesis data stream to ingest raw data from the trading system in real time. Use Amazon Kinesis Data Analytics to transform the raw data and output files to Amazon S3.

D. Complete a one-time transfer of the data using AWS Snowball Edge devices transferring to a target S3 bucket. Schedule a nightly job on the trading system servers that produces raw data to copy the incremental raw files to the target S3 bucket. Run the data transformation tool on a transient Amazon EMR cluster to output files to Amazon S3.
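
When weighing the options, it may help to estimate how long the stated data volumes would take to cross the 10 Gbps Direct Connect link. The following is a rough sketch only. It assumes decimal terabytes (1 TB = 10^12 bytes) and a link running at full line rate with no protocol overhead, which real transfers will not achieve. The function name `transfer_seconds` and the `utilization` parameter are hypothetical and introduced here for illustration.

```python
def transfer_seconds(size_tb: float, link_gbps: float, utilization: float = 1.0) -> float:
    """Estimate transfer time in seconds for size_tb terabytes over a link_gbps link.

    Assumes 1 TB = 10**12 bytes and 1 Gbps = 10**9 bits per second.
    utilization is the assumed usable fraction of the link (1.0 = ideal line rate).
    """
    bits = size_tb * 1e12 * 8
    return bits / (link_gbps * 1e9 * utilization)


# Figures taken from the scenario: 300 TB existing data, 1 TB daily growth, 10 Gbps link.
initial = transfer_seconds(300, 10)
daily = transfer_seconds(1, 10)

print(f"Initial 300 TB copy: {initial / 3600:.1f} hours")  # 66.7 hours at ideal line rate
print(f"Daily 1 TB increment: {daily / 60:.1f} minutes")   # 13.3 minutes at ideal line rate
```

Under these idealized assumptions, the existing data would take just under three days to transfer and each day's increment would take well under an hour. Passing a lower `utilization` value (for example `0.5`) gives a more conservative estimate.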